
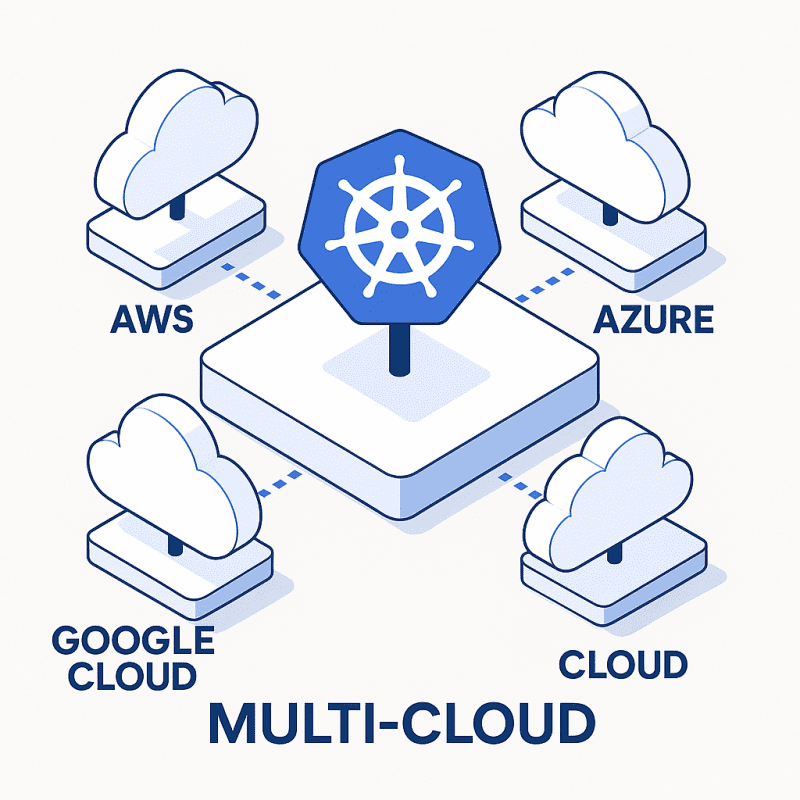

As Kubernetes continues to be at the forefront of cloud-native infrastructure, enterprises are moving workloads across multiple cloud providers. A multi-cloud Kubernetes strategy can be compelling for cost savings, high availability, compliance, and agility. However, running clusters in multiple cloud environments adds complexity that requires thorough planning and solid tooling.

In this post, we’ll discuss the fundamental issues of using Kubernetes within a multi-cloud environment and present practical strategies for streamlining and unifying its operations.

Key Challenges Facing Multi-Cloud Kubernetes Environments

1. Disjointed Cluster Administration

Each cloud provider has its own interface and set of Kubernetes management tools. This typically results in:

- A separate dashboard, CLI utility, and API for each provider

- Duplicate efforts to standardize configurations

- Siloed cluster visibility

Keeping operations consistent over these environments is a major challenge for platform teams.

2. Inconsistent Networking Models

Each cloud provider implements networking differently. This affects:

- Service discovery between clusters

- Load balancer behavior and ingress configurations

- Network policy enforcement and IP address management

Without proper design, cross-cluster communication and routing during failover can be unreliable and insecure.

3. Security and Compliance Fragmentation

Security practices must be enforced uniformly across clouds, but each provider’s native identity and access management (IAM), logging, and secrets management work differently. This exposes gaps in:

- Role-Based Access Control (RBAC)

- Audit trails

- Policy enforcement

4. Limited Observability and Monitoring

Cloud providers typically provide their own logging and monitoring facilities, but aggregating insights across different environments is challenging. This causes:

- Partial visibility during outages

- Duplicate alerts and tool sprawl

- Complex troubleshooting procedures

Kubernetes as a Service (KaaS) is available from the leading cloud providers: EKS on AWS, GKE on Google Cloud, and AKS on Azure. These offerings abstract away most of the operational complexity of running Kubernetes infrastructure.

Strategies for Unified Management

To counter these issues, effective multi-cloud Kubernetes operations must support a cloud-agnostic strategy for management, security, and observability.

Centralized Cluster Management

Adopt a platform such as Rancher, VMware Tanzu, or Red Hat OpenShift to manage multiple clusters from one control plane. Advantages include:

- Unified RBAC policies across clouds

- Centralized provisioning workflows

- Consolidated cluster health monitoring

These solutions abstract away provider-specific details and simplify operations.
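To make the idea of consolidated health monitoring concrete, here is a minimal Python sketch that rolls per-cluster snapshots into one fleet-wide summary. The `ClusterStatus` schema, the cluster names, and the node counts are all hypothetical; a real management platform would collect this data through its own APIs, which are not shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterStatus:
    """Health snapshot for one cluster (hypothetical schema, for illustration)."""
    name: str
    provider: str  # e.g. "aws", "gcp", "azure"
    nodes_ready: int
    nodes_total: int


def summarize(statuses):
    """Roll per-cluster snapshots up into a single fleet-wide view."""
    # A cluster counts as unhealthy when at least one node is not ready.
    unhealthy = [s.name for s in statuses if s.nodes_ready < s.nodes_total]
    by_provider = {}
    for s in statuses:
        ready, total = by_provider.get(s.provider, (0, 0))
        by_provider[s.provider] = (ready + s.nodes_ready, total + s.nodes_total)
    return {
        "clusters": len(statuses),
        "unhealthy": unhealthy,
        "nodes_by_provider": by_provider,
    }


# Made-up fleet spanning three providers.
fleet = [
    ClusterStatus("eks-prod", "aws", 5, 5),
    ClusterStatus("gke-prod", "gcp", 3, 4),
    ClusterStatus("aks-dr", "azure", 2, 2),
]
summary = summarize(fleet)
print(summary["unhealthy"])  # ['gke-prod']
```

The point of the sketch is the shape of the data: once every cluster reports in the same schema, one query answers "which clusters need attention?" regardless of provider.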

GitOps for Configuration Consistency

Apply GitOps principles with a tool such as Argo CD or Flux. This enables:

- Declarative application and infrastructure management

- Automated configuration synchronization between clusters

- Integrated auditing and rollback using version control

Git becomes the source of truth, streamlining deployment and reducing configuration drift.
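The drift detection at the heart of this approach can be illustrated with a small Python sketch. It is not how Argo CD or Flux are implemented; it only shows the underlying comparison between a desired state (as it would be rendered from Git) and the live state of each cluster. All keys, values, and cluster names are made up for illustration.

```python
def find_drift(desired, live):
    """Return {key: (desired_value, live_value)} for every setting that differs.

    A value of None means the key is absent on that side.
    """
    drift = {}
    for key in sorted(set(desired) | set(live)):
        want, have = desired.get(key), live.get(key)
        if want != have:
            drift[key] = (want, have)
    return drift


# Desired state as it would be rendered from the Git repository (made-up values).
desired = {"image": "shop/api:1.4.2", "replicas": 3, "log_level": "info"}

# Live state per cluster, as a sync agent might observe it (made-up values).
live_by_cluster = {
    "eks-prod": {"image": "shop/api:1.4.2", "replicas": 3, "log_level": "info"},
    "gke-prod": {"image": "shop/api:1.4.1", "replicas": 3, "log_level": "debug"},
}

report = {name: find_drift(desired, live) for name, live in live_by_cluster.items()}
for name, drift in report.items():
    print(name, "in sync" if not drift else f"drifted: {drift}")
```

Because every cluster is compared against the same desired state, a cluster that was changed by hand shows up as drift instead of silently diverging.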

Cross-Cloud Networking Using a Service Mesh

Implement a service mesh like Istio, Linkerd, or Consul to provide secure and consistent communication between services across clouds. Service meshes offer:

- mTLS encryption between services

- Traffic routing and load balancing

- Visibility into service behavior and performance

This is particularly helpful for hybrid and failover configurations.
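As a rough illustration of failover routing, the Python sketch below redistributes a weighted traffic split when a cluster becomes unhealthy. It models the idea only; it does not use any Istio, Linkerd, or Consul API, and the cluster names and weights are hypothetical.

```python
def effective_weights(weights, healthy):
    """Drop unhealthy clusters and rescale the remaining weights to sum to 100."""
    active = {
        name: w
        for name, w in weights.items()
        if healthy.get(name, False) and w > 0
    }
    total = sum(active.values())
    if total == 0:
        raise RuntimeError("no healthy cluster available for this service")
    return {name: 100 * w / total for name, w in active.items()}


# Configured traffic split across three clusters (made-up values).
weights = {"eks-prod": 60, "gke-prod": 30, "aks-dr": 10}

# Normal operation: every cluster is healthy, so the split is unchanged.
all_up = {"eks-prod": True, "gke-prod": True, "aks-dr": True}
print(effective_weights(weights, all_up))

# Failover: the AWS cluster is down, so its share moves to the other two.
aws_down = {"eks-prod": False, "gke-prod": True, "aks-dr": True}
print(effective_weights(weights, aws_down))  # {'gke-prod': 75.0, 'aks-dr': 25.0}
```

Rescaling proportionally keeps the relative preference between the surviving clusters, which is one reasonable choice; a real mesh may offer other failover policies.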

Policy and Security Enforcement

Use Open Policy Agent (OPA) or Kyverno to implement consistent security policies across clusters. Pair this with:

- HashiCorp Vault for secrets management

- OIDC-compatible identity providers for federated access control
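The sketch below shows, in plain Python, the kind of rule such a policy engine evaluates. It is not OPA's Rego or a Kyverno policy; it only demonstrates that one rule set can be applied unchanged to pod specs from any cluster. The required `team` label and both example pods are assumptions made for illustration.

```python
def violations(pod):
    """Return a list of human-readable policy violations for one pod spec."""
    found = []
    labels = pod.get("metadata", {}).get("labels", {})
    if "team" not in labels:  # hypothetical organization-wide labeling rule
        found.append("missing required label 'team'")
    for c in pod.get("spec", {}).get("containers", []):
        if c.get("securityContext", {}).get("privileged", False):
            found.append(f"container '{c['name']}' runs privileged")
        if c.get("image", "").endswith(":latest"):
            found.append(f"container '{c['name']}' uses the ':latest' tag")
    return found


# Two made-up pod specs, as they might be submitted to different clusters.
good_pod = {
    "metadata": {"labels": {"team": "payments"}},
    "spec": {"containers": [{"name": "api", "image": "shop/api:1.4.2"}]},
}
bad_pod = {
    "metadata": {"labels": {}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:latest",
                "securityContext": {"privileged": True},
            }
        ]
    },
}

for cluster, pod in {"eks-prod": good_pod, "aks-dr": bad_pod}.items():
    problems = violations(pod)
    print(cluster, "allowed" if not problems else f"denied: {problems}")
```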

This way, security and compliance don’t rely on the cloud provider.

If your organization has a multi-cloud Kubernetes deployment planned or is already running one, review your existing workflows and tools. Are your teams operating within infrastructure silos? A common strategy can provide accelerated deployment, improved performance, and more secure, reliable applications.