
Anna was shocked and horrified when she stumbled upon a video that appeared to show her engaged in a sexual act with a stranger whose advances she had rejected.

We don’t choose the people we cross paths with, and unfortunately, some people we meet don’t have good intentions.

The face was unmistakably Anna’s, but the body wasn’t. She couldn’t stop crying. Who would believe her? The images replayed in her mind again and again, and a feeling of dread took hold of her. She couldn’t go outside without feeling exposed.

In another incident in 2017, a Redditor known as “deepfakes” started a subreddit featuring fabricated videos that depicted celebrities such as Maisie Williams and Taylor Swift engaged in explicit acts.

Even more alarming, the subreddit grew to an astonishing 90,000 subscribers, a measure of how popular such deceptive content had become.

Welcome to the 21st century, where, with a few clicks, a disgruntled individual can manufacture reality to hurt someone they don’t like. Deepfake technology is the disturbing dark side of artificial intelligence (AI).

Creating deepfake porn doesn’t require special skills or knowledge. Anyone can do it with simple, readily available face-swapping apps. And as the technology advances, deepfakes seem more likely than ever to proliferate.

The Mechanics of Deepfakes

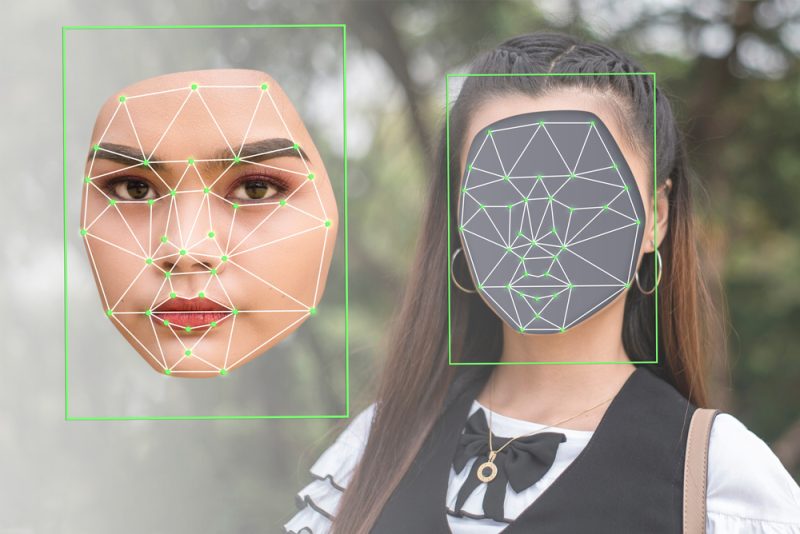

Producing a deepfake involves a sophisticated set of artificial intelligence algorithms. These algorithms analyze vast amounts of image and video data to learn and replicate a person’s facial features, expressions, and movements.

A key technique behind many deepfakes is the Generative Adversarial Network (GAN). GANs refine their output by pitting a pair of neural networks against each other.

One network, the generator, produces the fake content, while the other, the discriminator, tries to tell the fakes apart from real examples. As the discriminator gets better at spotting fakes, the generator is pushed to produce more convincing ones. The result is an adversarial loop that steadily refines the output until the fakes are hard to detect.
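For readers who want the math, this tug-of-war is usually written as the minimax objective from the original GAN paper (Goodfellow et al., 2014). It is the standard textbook formulation, not necessarily what any particular deepfake app implements. In the formula:

- $D(x)$ is the discriminator’s estimated probability that an input $x$ is real.
- $G(z)$ is the generator’s output for a random noise vector $z$.
- $p_{\text{data}}$ is the distribution of real images.
- $p_z$ is the noise distribution.

$$
\min_G \max_D V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to raise $V$ by scoring real samples close to 1 and fakes close to 0. The generator tries to lower $V$ by producing samples that the discriminator scores as real.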

Anna was a victim of face swapping, the most common deepfake technique. With face swapping, a victim’s face is transplanted onto another person’s body, often seamlessly.

Face swapping has legitimate uses, such as bringing beloved or historical characters to life on screen. But the potential for misuse is great, especially through revenge porn and the spread of disinformation.

The Risks and Ethical Implications

Uldouz Wallace, an Iranian actress, fell victim to the 2014 iCloud hack that exposed private photos of numerous celebrities, including Jennifer Lawrence.

Wallace then endured a distressing aftermath: her images were used in deepfake pornography.

Reflecting later on the ordeal, Wallace described it as a multilayered form of abuse, intensified by the rise of deepfakes.

Fake content has spread so widely that Wallace now finds it hard to tell what is real and what is fabricated. Her experience highlights how pervasive and disorienting the impact of deepfakes has become in today’s digital landscape.

It’s no longer easy for the average person to tell what is real and what is manipulated. That is a sad reality, and one that erodes trust in digital media. Will Anna and Wallace ever again trust what they see on video or in other media?

The potential to spread misinformation and manipulate public opinion is significant. For example, a malicious actor can create convincing audio or video to turn a community against a leader, stir up unrest, or in some cases even influence elections.

Legal and Regulatory Considerations

Legislation and regulations are struggling to keep up with AI and other technological advances. Only a few states, such as Virginia and California, have taken steps to address deepfakes, and comprehensive federal protection is still lacking. That leaves many victims without legal recourse.

Anna’s case illustrates the gap. Her lawyer advised her that she couldn’t sue for defamation: the deepfake she fell victim to wasn’t technically revenge porn, and there are no federal laws against deepfake porn.

Efforts such as the Preventing Deepfakes of Intimate Images Act, proposed by Rep. Joseph Morelle (D-N.Y.), aim to curb the spread of such content and protect individuals from being placed in compromising situations online. But legislation and regulation still lag far behind the pace of technological change.

Mitigation Strategies and Future Directions

So, as a society, how do we even begin to combat deepfakes, given the complicated nature of the technology? Experts agree that a multifaceted approach, which starts with awareness, is crucial.

Education on the existence and potential dangers of deepfakes can help reduce their impact on individuals and society. If a community knows that videos can be manipulated, it is less likely to be stirred into unrest by a fake one.

Likewise, discerning media consumers are more likely to believe a victim who says a video has been manipulated. After all, such content does the most damage when no one believes the victim.

Collaboration between tech companies and governments is vital to curbing the problem through comprehensive strategies and policies. Establishing ethical guidelines and frameworks for responsible AI content creation is also essential.